Does Dynamic Application Security Testing (DAST) Deliver?

The idea behind Dynamic Application Security Testing (DAST) is pretty clever: a tool that simulates a human penetration tester. Give it the URL of an app to test, and the tool gets its hands dirty and produces a vulnerability report.

DAST tools are not just contextless fuzzers. They have intelligence and decision-making capabilities that help them produce more relevant results.

As a security engineer, I have used DAST tools many times, and I have been curious about these tools that threaten to take my job. I spent some time reverse engineering several of them to better understand how they work and what I can learn from them.

This article discusses different components of DAST tools, how they work together, and challenges they face in the modern development environment.

Behind the scenes

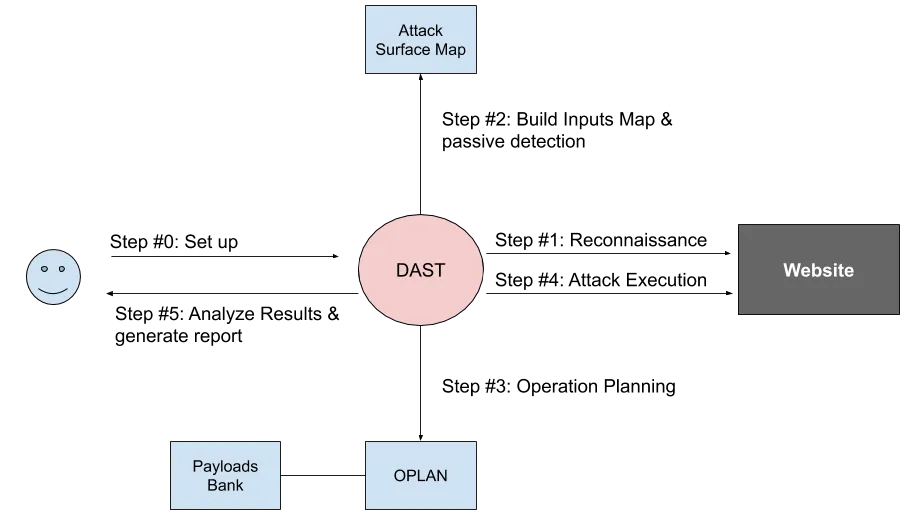

Let’s jump into the underlying mechanism of a DAST tool:

Step 0 - Setup

The “operator” (usually someone from the AppSec team or a pentester) builds the scan profile, including the URL to test, credentials, exclusions, and the like.

Step 1 - Reconnaissance

The DAST tool starts flirting with the app, asking many questions to get to know it better. The goal is to access as many entry points as possible and understand how to use them. There are different ways to accomplish this:

- Web spider. Works best with multi-page applications. Spiders scan HTML files for links and follow each one recursively.

- Client-side file analysis. Analyzes client-side files (JavaScript from web apps, iOS IPA files, Android APK files) to find entry points.

- Name guessing. Uses dictionaries to look for existing entry points (the DirBuster approach) or plain brute force (aaaaaaaa-zzzzzzzz style).

- Spec import. Imports documentation, sitemaps, or API specs/contracts (OpenAPI, WADL, etc.) to find entry points.
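To make the spider idea concrete, here is a minimal breadth-first crawler in Python. It is a sketch, not how any particular DAST product works: it extracts `href`, `src`, and `action` attributes with the standard library's `HTMLParser` and stays on the starting host. The `fetch` callback is a hypothetical hook (any function that returns a page's HTML or `None`) so the sketch runs without network access; the queue replaces recursion but visits the same links.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects link-like attribute values (href, src, action) from HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src", "action") and value:
                self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first spider that only follows links on the start URL's host.

    `fetch(url)` is a hypothetical callback returning HTML text or None.
    Returns every same-host URL discovered, whether or not it was fetched.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    fetched = 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        html = fetch(url)
        fetched += 1
        if html is None:
            continue  # not an HTML page (or unreachable): nothing to parse
        parser = LinkExtractor()
        parser.feed(html)
        for link in parser.links:
            # Resolve relative links and drop #fragments, which never reach the server.
            absolute, _fragment = urldefrag(urljoin(url, link))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return seen
```

Endpoints that never appear in a link (for example, APIs called only from JavaScript) stay invisible to this approach, which is why the other discovery methods exist.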

Step 2 - Building the Attack Surface Map

At this point, the tool should have gathered a decent amount of traffic to analyze. Using this data it can learn different things:

Inputs Map

Remember: every input sent to the app is a potential place to attack. The goal here is to create a map that describes where entry points exist and how to feed them with input.

| Entry point | Request template |
|---|---|
| GET /top_users.aspx | [Query] `index=<input1>` |
| POST /login.aspx | [Body] `user_name=<input2>&password=<input3>` |
| PUT /rest/update_pass | `{"new_pass":<input4>,"username":<input5>}` |
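One way to hold the inputs map in code is a small record per entry point whose template marks each attackable input with a placeholder. This Python sketch is only an illustration: the `RequestTemplate` class, the `location` labels, and the `$inputN` placeholder syntax (borrowed from `string.Template`) are assumptions, not a format any tool is known to use. Rendering encodes each value for the part of the request it lands in: JSON-escaping for JSON bodies, percent-encoding otherwise.

```python
import json
from dataclasses import dataclass
from string import Template
from urllib.parse import quote


@dataclass(frozen=True)
class RequestTemplate:
    """One row of the inputs map: an entry point plus where its inputs go."""
    method: str
    path: str
    location: str   # "query", "form" or "json" (labels invented for this sketch)
    template: str   # $inputN placeholders mark the attackable inputs

    def render(self, **values):
        # Encode each value for the part of the request it lands in.
        if self.location == "json":
            encoded = {k: json.dumps(v) for k, v in values.items()}
        else:
            encoded = {k: quote(str(v), safe="") for k, v in values.items()}
        return Template(self.template).substitute(encoded)


INPUTS_MAP = [
    RequestTemplate("GET", "/top_users.aspx", "query", "index=$input1"),
    RequestTemplate("POST", "/login.aspx", "form", "user_name=$input2&password=$input3"),
    RequestTemplate("PUT", "/rest/update_pass", "json", '{"new_pass":$input4,"username":$input5}'),
]
```

Keeping the encoding inside the template means a payload containing quotes or ampersands cannot accidentally break the request structure before it ever reaches the app.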

App Metadata

Based on fingerprinting techniques it’s possible to make educated guesses about the technologies and frameworks used by the tested app. For example, if all entry points have an extension of “.aspx”, we can assume the app is written in .NET and not PHP.
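A fingerprinting step can be as simple as counting votes from URL extensions and telltale headers. The Python sketch below is a deliberately tiny illustration: the `EXTENSION_HINTS` table and the weight given to the `X-Powered-By` header are assumptions, and real tools combine many more signals (cookies, error pages, default files).

```python
from collections import Counter
from posixpath import splitext
from urllib.parse import urlparse

# Illustrative extension-to-technology hints; real signature sets are far larger.
EXTENSION_HINTS = {".aspx": ".NET", ".php": "PHP", ".jsp": "Java"}


def guess_stack(urls, headers=None):
    """Return the most likely server-side technology, or None if there is no signal."""
    votes = Counter()
    for url in urls:
        extension = splitext(urlparse(url).path)[1].lower()
        if extension in EXTENSION_HINTS:
            votes[EXTENSION_HINTS[extension]] += 1
    # Header names are case-insensitive, so normalize them before lookup.
    normalized = {name.lower(): value for name, value in (headers or {}).items()}
    powered_by = normalized.get("x-powered-by", "")
    # An explicit banner is stronger evidence than a single URL (the weight is arbitrary).
    if "ASP.NET" in powered_by:
        votes[".NET"] += 5
    elif "PHP" in powered_by:
        votes["PHP"] += 5
    return votes.most_common(1)[0][0] if votes else None
```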

Passive Vulnerability Detection

This is the bread and butter of many security solutions: it’s possible to detect vulnerabilities without generating any malicious traffic! For example, based on the port number, you can tell that a website doesn’t encrypt its traffic, or, based on the Server header in the response, that the application server is unpatched and affected by a public CVE.
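Here is a minimal Python sketch of two passive checks in that spirit, run against a URL and response headers the scanner already has. The URL scheme stands in for the port-number check, and `MIN_SAFE_VERSIONS` with the product name `ExampleHTTPd` is a made-up placeholder; a real tool would map `Server` banners to CVEs using a vulnerability feed.

```python
import re
from urllib.parse import urlparse

# Hypothetical placeholder: product name -> lowest version considered patched.
MIN_SAFE_VERSIONS = {"ExampleHTTPd": (2, 0, 0)}


def passive_findings(url, headers):
    """Flag issues visible in normal traffic, without sending any attack payload."""
    findings = []
    if urlparse(url).scheme == "http":
        findings.append("Traffic is not encrypted (plain HTTP)")
    server = {name.lower(): value for name, value in headers.items()}.get("server", "")
    match = re.match(r"([\w-]+)/(\d+(?:\.\d+)*)", server)  # e.g. "ExampleHTTPd/1.2.3"
    if match:
        product, version_text = match.group(1), match.group(2)
        version = tuple(int(part) for part in version_text.split("."))
        minimum = MIN_SAFE_VERSIONS.get(product)
        if minimum and version < minimum:  # tuple comparison orders versions numerically
            findings.append(f"Outdated {product} {version_text} disclosed in Server header")
    return findings
```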

Step 3 - Operation Planning

Payloads Bank

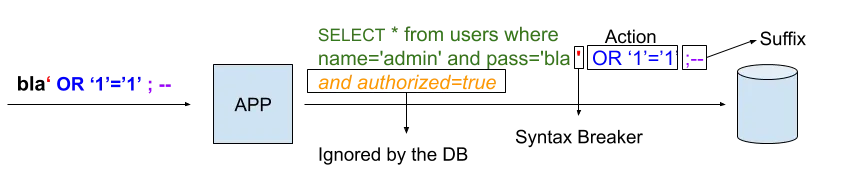

The secret sauce of DAST tools is an extensive list of payloads for different vulnerabilities. Let’s clarify a few terms before jumping in.

Payload

A payload is a string that tries to exploit a vulnerability and cause malicious behavior. For example, "<script>alert(21)</script>" is a payload that tries to exploit an XSS and trigger an alert box. Most DAST payloads contain 2-3 components:

- Syntax Breaker. A special character to break the syntax of a query/command/code.

- Brain. What actions will the payload take? Some actions are subtle like “console.log()” in the case of XSS, while others are extreme like “format c: /fs:ntfs” in the case of shell injection.

- Suffix. Neutralizes whatever the application concatenates after our input (for example, a SQL comment marker like “--” that cuts off the rest of the query), so the payload runs without disruption.
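The three-part structure maps naturally onto a small record. The Python sketch below is illustrative (the `Payload` class is hypothetical): the two instances reuse the XSS brain and an MSSQL delay brain from this article, wrapped in breakers and suffixes that assume the input lands inside a single-quoted SQL string or a double-quoted HTML attribute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Payload:
    breaker: str      # closes the string/statement the input lands in
    brain: str        # the action we want the target to execute
    suffix: str = ""  # neutralizes whatever the app appends after our input

    def __str__(self):
        return f"{self.breaker}{self.brain}{self.suffix}"


# SQL injection into a single-quoted string literal (MSSQL syntax).
sqli_delay = Payload(breaker="'; ", brain="WAITFOR DELAY '00:00:10'", suffix="--")
# Reflected XSS that breaks out of a double-quoted HTML attribute.
xss_alert = Payload(breaker='">', brain="<script>alert(21)</script>")
```

Keeping the parts separate lets a tool recombine one brain with many breakers, which is how a small set of actions fans out into many payloads.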

Exploitation Success Criteria

Once we send a payload, it’s not always clear whether it triggered a real vulnerability or did no harm. One way to approach this problem is to create payloads with generic “brains” that trigger noticeable actions. A few common examples:

- Time Based. In the case of injections, the brain calls a function that causes a delay of 10 seconds. If the HTTP response is delayed by 10-20 seconds, it’s safe to assume the payload triggered a real vulnerability. If the response arrives instantly, the payload probably didn’t trigger a real vulnerability.

- Error Based. We can send a payload that will violate the syntax of the framework and cause an error. If the response contains a string that describes a known framework error, it’s safe to assume there’s a vulnerability.
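Both criteria reduce to small predicates over a response. In this Python sketch, the 10-20 second window from the time-based rule is measured relative to a baseline response time (an assumption, so that a naturally slow page doesn't count), and the error signatures are a short sample of well-known database error messages, not a complete list.

```python
import re

# A small sample of well-known database error fragments.
ERROR_SIGNATURES = [
    r"You have an error in your SQL syntax",                 # MySQL
    r"Unclosed quotation mark after the character string",  # MSSQL
    r"ORA-\d{5}",                                            # Oracle error codes
    r"syntax error at or near",                              # PostgreSQL
]


def time_based_hit(baseline_s, observed_s, delay_s):
    """True if the response took between delay_s and 2 * delay_s longer than usual."""
    extra = observed_s - baseline_s
    return delay_s <= extra <= 2 * delay_s


def error_based_hit(body):
    """True if the response body contains a known database error message."""
    return any(re.search(signature, body) for signature in ERROR_SIGNATURES)
```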

Keeping this list updated is an endless, tedious job that requires security researchers to always be up to date with recent framework changes, and to think about new ideas for how to create noticeable payloads.

Let’s say we want to create a SQLi payload that causes a delay. Sounds straightforward? Not really.

First, different SQL databases have unique “sleep” commands. A short list:

- Oracle: dbms_lock.sleep(10)

- MSSQL: WAITFOR DELAY '00:00:10'

- Postgres: pg_sleep(10)

And you better have time-based payloads to cover all of them!
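Since each database spells the delay differently, a payload generator typically parameterizes the duration per engine. This Python sketch (the `delay_brains` helper is hypothetical) builds the three brains listed above for any whole number of seconds. MSSQL is the awkward one, because `WAITFOR DELAY` takes an `hh:mm:ss` string rather than a number.

```python
def delay_brains(seconds):
    """Return the time-delay 'brain' for each database engine."""
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    return {
        "Oracle": f"dbms_lock.sleep({seconds})",
        "MSSQL": f"WAITFOR DELAY '{hours:02}:{minutes:02}:{secs:02}'",
        "Postgres": f"pg_sleep({seconds})",
    }
```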

Second, what happens if the database administrator has disabled the sleep function?

Some techniques cause a delay even without it, for example by forcing the database to evaluate a deliberately expensive query.

Or, in the case of shell injection, what happens if the “sleep” command isn’t available for some reason? You can use “ping -c 10 localhost” instead to cause a similar delay.

This is just the tip of the iceberg, but the point is there are hundreds of frameworks to support, and multiple techniques to cause a delay in each one of them. And causing a delay is just one method to validate a vulnerability.
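One way to organize such a bank is to key it by vulnerability and platform and keep the alternatives in fallback order, so the scanner can move on when the preferred technique is blocked. In this Python sketch, `PAYLOAD_BANK` and `delay_candidates` are hypothetical; the entries are the delay techniques mentioned in this article. When fingerprinting couldn't identify the platform, the helper returns every variant, which is exactly why breadth matters.

```python
# Hypothetical payload bank: (vulnerability, platform) -> delay brains,
# ordered from the preferred technique to its fallbacks.
PAYLOAD_BANK = {
    ("command_injection", "linux"): [
        "sleep 10",
        "ping -c 10 localhost",  # fallback when `sleep` is unavailable
    ],
    ("sqli", "postgres"): ["pg_sleep(10)"],
    ("sqli", "mssql"): ["WAITFOR DELAY '00:00:10'"],
}


def delay_candidates(vulnerability, platform=None):
    """Delay brains for a vulnerability; every platform's variants if it is unknown."""
    if platform is not None:
        return list(PAYLOAD_BANK.get((vulnerability, platform), []))
    return [
        brain
        for (vuln, _platform), brains in PAYLOAD_BANK.items()
        if vuln == vulnerability
        for brain in brains
    ]
```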

OPLAN

Once you have an extensive payloads bank, you’re only halfway there.

Attack planning is about taking the right payloads from the payloads bank and blending them into the Request Templates from the Attack Surface Map. This creates ready-to-use malicious HTTP requests. This process should consider the content type (where to place the payload in the request and how to encode it), parameter names, and the metadata we collected about the app.

- If an endpoint is a REST API that returns JSON, it doesn’t make sense to include reflected XSS payloads.

- If an app is written in .NET, it doesn’t make sense to include payloads for Ruby-specific vulnerabilities.
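The two filtering rules above can be sketched as a nested loop over entry points and bank entries. Everything in this Python example is an assumption for illustration: `Endpoint`, `BankEntry`, the technology tags, and a JSON-only body format. The payload goes through `json.dumps`, so quotes inside it are escaped and the request stays valid JSON, which is what “how to encode it” means in practice.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    method: str
    path: str
    json_field: str      # the input being targeted, e.g. "new_pass"
    returns_html: bool   # False for a REST API that answers with JSON


@dataclass(frozen=True)
class BankEntry:
    name: str
    payload: str
    technologies: frozenset = frozenset()  # empty means "applies to any stack"
    needs_html: bool = False               # True for reflected XSS payloads


def plan(endpoints, bank, app_technologies):
    """Blend bank payloads into request templates, skipping pointless combinations."""
    attacks = []
    for endpoint in endpoints:
        for entry in bank:
            if entry.needs_html and not endpoint.returns_html:
                continue  # reflected XSS can't render in a JSON response
            if entry.technologies and not entry.technologies & app_technologies:
                continue  # e.g. Ruby-only payloads against a .NET app
            body = json.dumps({endpoint.json_field: entry.payload})
            attacks.append((entry.name, f"{endpoint.method} {endpoint.path}", body))
    return attacks
```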

| Targeted entry point | Targeted input | Tested vulnerability | Payload | Success criteria |
|---|---|---|---|---|
| PUT /rest/update_pass | input4 [new_pass] | SQLi #1 - time based [MSSQL] | `{"new_pass":"; WAITFOR DELAY '00:00:10';"}` | HTTP_Response.time is delayed |
| PUT /rest/update_pass | input4 [new_pass] | SQLi #99 - error based | `{"new_pass":"; ' fsdfsdf--- "}` | HTTP_Response.body contains “error” |
| PUT /rest/update_pass | input4 [new_pass] | Command Injection #1 - time based | `{"new_pass":" \" && sleep 10 "}` | HTTP_Response.time is delayed |
| PUT /rest/update_pass | input4 [new_pass] | Command Injection #99 - out-of-band [Linux] | `{"new_pass":" \" && curl http://scanner_collaborator.com/scan_232 "}` | Collaborator server’s access log shows the callback |
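The last row relies on an out-of-band check: the payload makes the server call back to a host the scanner controls, and a hit in that host’s access log confirms the injection. A useful refinement is to give every payload a unique token so each callback can be traced to the exact request that caused it. This Python sketch assumes that design: `scanner_collaborator.com` is the placeholder host from the table, the helper names are hypothetical, and the test log lines are invented in a common-log-like format.

```python
import re
import uuid

COLLABORATOR = "scanner_collaborator.com"  # placeholder host used in the table


def issue_oob_payload(target, issued):
    """Build a curl payload with a unique token and remember which request it belongs to."""
    token = uuid.uuid4().hex  # 32 lowercase hex characters
    issued[token] = target
    return f'" && curl http://{COLLABORATOR}/{token} '


def match_callbacks(access_log_lines, issued):
    """Return the targets whose token appears in the collaborator's access log."""
    hits = []
    for line in access_log_lines:
        for token in re.findall(r"/([0-9a-f]{32})\b", line):
            if token in issued:
                hits.append(issued[token])
    return hits
```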

Step 4 - Attack Execution

We have an operation plan, we know how we want to attack, and which weapons to use. Now it’s time to sit in the war room, send the soldiers to the field, and monitor their progress.

Even though we already know which entry points we want to attack and which payloads to use, we might face some unplanned events:

- IP address got blocked

- Website returns only errors (we might have crashed the server, or perhaps a WAF is blocking us)

- Website responds too slowly (we might want to send fewer requests per minute to avoid causing a denial of service)

How to respond to these events can partly be configured by the operator before the scan; other decisions might be made by the DAST tool itself based on live traffic.
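Part of that runtime control can be sketched as an adaptive throttle: slow down when the target blocks requests, returns server errors, or responds slowly, and speed up again once it recovers. This Python class is a hypothetical illustration with arbitrary thresholds. One subtlety it handles: time-based payloads slow responses on purpose, so the expected injected delay is subtracted before judging whether the server is struggling.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveThrottle:
    """Back off when the target looks unhappy; speed up again when it recovers."""
    delay_s: float = 0.1          # pause between requests
    min_delay_s: float = 0.1
    max_delay_s: float = 30.0
    slow_response_s: float = 5.0

    def record(self, status, elapsed_s, expected_delay_s=0.0):
        """Update the pause after a response; return False when the scan should stop."""
        blocked = status in (403, 429)  # possible IP block or WAF
        # Ignore slowness our own time-based payload asked for.
        slow = elapsed_s - expected_delay_s > self.slow_response_s
        if blocked or status >= 500 or slow:
            self.delay_s = min(self.delay_s * 2, self.max_delay_s)
        else:
            self.delay_s = max(self.delay_s / 2, self.min_delay_s)
        # Hitting the ceiling is a signal to pause and involve the operator.
        return self.delay_s < self.max_delay_s
```

Doubling on trouble and halving on success is a simple choice; it reacts quickly to blocks while letting the scan recover its pace gradually.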

Step 5 - Analyze Results and Generate Report

After the operation is complete, it’s time to analyze the traffic and logs to figure out which payloads triggered real vulnerabilities and which ones didn’t work. The resulting report explains which vulnerabilities were found and how to fix them.
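A single slow response can be a coincidence, so one common way to confirm a time-based finding during analysis is to resend the payload with several different delays and check that the response time tracks the requested delay. In this Python sketch, `measure` is a hypothetical callback that sends the payload with a given delay and returns the elapsed seconds; the delay values and the slack are arbitrary choices.

```python
def confirm_time_based(measure, delays=(0, 4, 8), slack_s=1.5):
    """Confirm a suspected time-based hit by checking that latency follows the delay.

    `measure(delay)` is a hypothetical callback returning response time in seconds.
    """
    baseline = measure(delays[0])
    for delay in delays[1:]:
        extra = measure(delay) - baseline
        if abs(extra - delay) > slack_s:
            return False  # latency didn't move with the requested delay
    return True
```

A server that is simply slow answers in roughly the same time whatever delay is requested, so it fails this check even though a single measurement might have looked like a hit.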

Now that we’ve explored how DAST tools work, let’s make some judgements.

The Advantages of DAST

- High risk-accuracy. Because the tool actually exploits the vulnerabilities it discovers, the findings it reports are more likely to be real and exploitable, as opposed to vulnerabilities that exist in the code but can’t be exploited from the outside.

- Minimal user interaction. Once the operator sets up a scan, they can just chill and wait for the results.

- Minimal access. Even if you don’t have access to the code and the development team isn’t excited about helping you, you can still run a successful scan.

The Challenges of DAST

- Lack of business logic context. DAST tools usually have only a superficial understanding of the app, which makes it impossible to detect business logic vulnerabilities, such as authorization and rate-limiting issues.

- Handling protection mechanisms. Since DAST tools generate HTTP calls from scratch, many of them fail to handle protection mechanisms that require custom client-side code, such as CSRF protection, request signing, and proprietary authentication.

- Old technology. DAST tools were built for traditional web applications, where you could just use a web-spider to discover the app. Unfortunately, in the world of single page applications and mobile applications, you need a more sophisticated approach.

- Very noisy. By nature, DAST tools trigger many malicious HTTP requests. It’s very risky to run them on production, and they might also crash your pre-production environments.

I hope you enjoyed the article and learned something new today.

DAST is a fascinating technology, and if you want to learn more about it, I encourage you to use your favorite DAST tool, redirect its traffic to a web proxy (like Fiddler) and observe all the API calls it generates. I personally do it from time to time to learn about new exploitation techniques and payloads.

About the Author

Inon Shkedy is Head of Research at Traceable.AI and a co-author of the OWASP Top 10 for APIs.

About Traceable AI

Traceable helps companies secure their applications and APIs by providing complete visibility and risk assessment of APIs, their data flow, and transactions, and detecting and blocking attacks before they happen. Traceable does this with extremely low false positives by combining distributed tracing and cutting-edge continuous unsupervised machine learning to rapidly identify anomalies in user and system behaviors. To learn more about Traceable, you can visit us at https://traceable24dev.wpenginepowered.com or view a recorded demo.
