Discover and Protect Generative AI APIs

APIs are powering the adoption of generative AI in the enterprise. Securely and safely integrating generative AI into applications starts with APIs.

Generative AI Introduces New Security Risks

Shadow AI

The rise of generative AI as-a-service has made it easier than every for organizations to add AI-powered features into applications. Generative AI introduces new risks, but product and application security teams are often left out of the loop on where and how AI is used. Gaining visibility into generative AI APIs is the first step to identifying shadow AI and laying the foundation for AI security and governance.

Sensitive Data Risk

Sensitive data loss is one of the top concerns of security and risk teams when it comes to generative AI adoption. Business users might share sensitive personal or business data with a third-party AI provider. LLMs might disclose sensitive data in their outputs either inadvertently or as a result of a successful prompt injection attack. Monitoring sensitive data flows to and from AI APIs

OWASP LLM Top 10

Generative AI is non-deterministic in nature and behaves differently from other systems in your applications. This introduces unique security risks to applications that leverage Generative AI to power features and experiences. The OWASP LLM Top 10 catalogs these unique vulnerabilities. It’s important to test Generative AI APIs to detect vulnerable implementations that could affect the security posture of your application.

APIs Power Generative AI Applications

APIs are the connective tissue of generative AI applications. Many organizations are incorporating generative AI to power customer-facing chatbots and internal user productivity applications. Typically, organizations are leveraging 3rd party models-as-a-service such as GPT-4 or Claude, but some organizations may use self-hosted models. User prompts and model responses pass through APIs, making APIs the natural trust boundary between users, application services, and AI models.

Discover and Inventory Every API

Securing Generative AI Starts with APIs

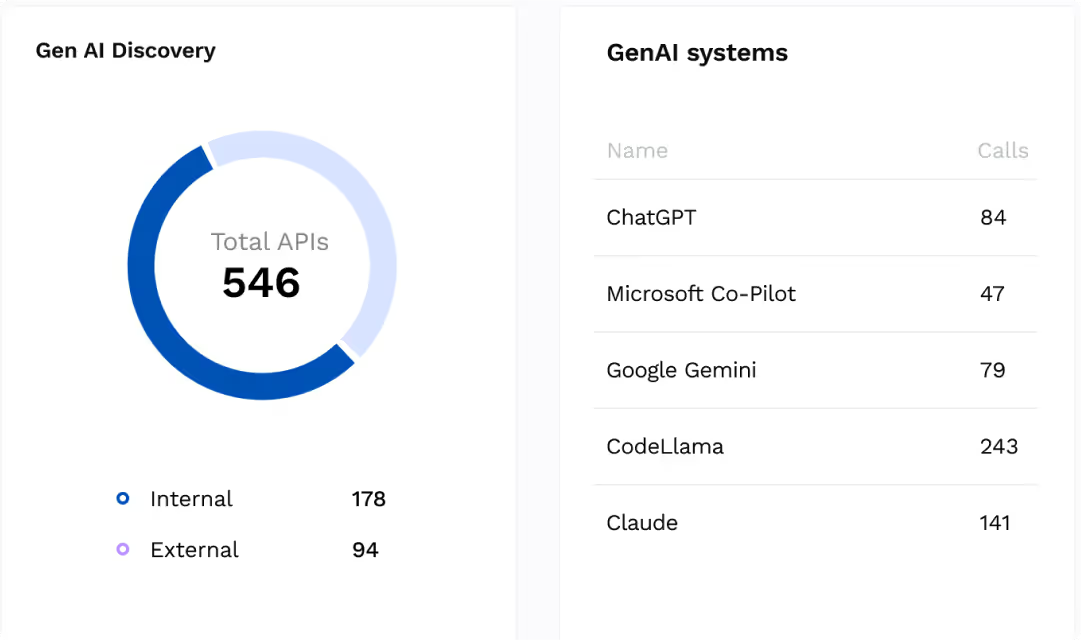

Discover Every AI API

Identify all APIs in your applications that call generative AI systems, whether they come from third party model providers like OpenAI and Anthropic, or from self-hosted systems. Know when new generative AI APIs come online, and monitor security posture in a consolidated Generative AI API Security dashboard.

Quantify API Risk

Automate and Scale API Vulnerability Testing

Track Sensitive Data Flows to AI APIs

Monitor what types of data are present in API requests and responses to and from generative AI systems. Identify and block sensitive data in requests and responses to prevent a data leak or compliance violation. Identify other data types such as code, foreign languages, or forbidden topics and set your on policies to restrict unwanted content.

Test AI APIs for Vulnerabilities

Automate testing across all your generative AI API endpoints to find and fix vulnerabilities and configuration issues. Test for common API vulnerabilities including the OWASP API Top 10 and AI-specific vulnerabilities such as OWASP LLM Top 10.

Detect and Block API Attacks, Fraud, and Abuse

.avif)

Automate and Scale API Vulnerability Testing

Detect and Block AI-Specific Threats

Detect and block attacks on AI applications including threats in the OWASP LLM Top 10. Prompt injection, sensitive data disclosure, and insecure output handling can be detected by examining generative AI API requests and responses.

See why security leaders in Public Sectors love Traceable

.avif)