Securing Generative AI-Enabled Applications: Crawl - Walk - Run Strategy

Buzz around generative AI security is at an all-time high, but most security pros are still in “listen and learn” mode when it comes to securing generative AI in their applications. This is a sensible approach. On the product and engineering side, many companies are still figuring out the use cases for gen AI in their applications and experimenting with different models and infrastructure. The market for gen AI models and supporting infra has been very dynamic in the last two years, providing a low resolution picture for anyone attempting to secure it. On top of that, product and application security teams are plenty busy securing their existing application infrastructure, configuring tests, and managing vulnerabilities.

According to Gartner, 80% of organizations will have used generative AI APIs or deployed generative AI-enabled applications by 2026. While securing generative AI-enabled applications may not be the first priority for product and application security teams today, it’s definitely on the horizon. So when is it time to get serious about securing generative AI in your applications, and what steps should you take? In this post, we’ll outline a Crawl - Walk - Run approach for product and application security pros when it comes to securing generative AI-enabled applications.

Crawl: Discover Generative AI in Your Applications

One of the main challenges for product and application security teams is keeping up with what the development team is doing. It’s no different when it comes to generative AI.

Fantasy World: Your company is building a new generative AI-enabled feature into your application. The engineering leaders meet with the product security team to discuss their approach. The product security team conducts a thorough design review and risk assessment, and tests the feature for vulnerabilities before it is released.

Reality: Your company is building a new generative AI-enabled feature into your application. The product security team finds out about it from a LinkedIn post from the CEO announcing that the feature is live.

Even worse reality: Your company is building a new generative AI-enabled feature into your application. It’s never announced and the product security team never finds out about it. The product team moves on and doesn’t maintain the feature, but there are still API calls from your application to the third party generative AI service.

To avoid this reality, the product security team needs visibility into where generative AI such as LLMs (large language models), is used in their applications and they need to get that visibility without relying on engineering to tell them about it. It’s cliche, but if you can’t see it you really can’t secure it. Visibility into where and how generative AI is implemented in applications will inform every other part of the organization’s generative AI security strategy, including whether or not any further action is needed.

The good news is, you can discover generative AI in your applications without immediately needing to adopt specialized generative AI security tools. In fact, visibility is a prerequisite for implementing most generative AI application security tools, not something the tool can provide on its own. The key is having visibility into your organization’s APIs. APIs connect generative AI systems to other application services. Whether your organization is using a third-party LLM model-as-a-service such as OpenAI’s GPT-4 or self-hosting a proprietary model, APIs are the connective tissue. Discovering and cataloging generative AI APIs is the first step to identifying where generative AI is present in your products and applications. A comprehensive API discovery tool should have the ability to discover all APIs across your products and applications, and automatically identify generative AI APIs based on the unique characteristics of generative AI API payloads.

Walk: Understand & Manage Your Generative AI API Security Posture

Once you've discovered where generative AI APIs are present in your applications, you can move to evaluating the security posture of those APIs. Standard API security best practices apply, such as ensuring proper authentication and authorization controls are implemented and enforced. You should continuously monitor and test all APIs in your applications so you can quickly respond to vulnerabilities and maintain a strong security posture. Generative AI APIs introduce an additional set of risks to applications, many of which are outlined in the OWASP LLM Top 10. These vulnerabilities are unique to generative AI and require additional design and testing considerations.

The unique risks posed by generative AI systems stem from the fact that LLM output is nondeterministic. This means that given the same input, LLMs can produce different outputs, making them a wild card inside of applications. LLMs are prone to manipulation (see prompt injection) and have a tendency to hallucinate (make stuff up). While model providers are constantly working to improve the quality and safety of models, product security professionals should default to considering LLM outputs untrustworthy. Overreliance and insecure output handling are OWASP LLM Top 10 risks that occur when organizations fail to implement proper trust boundaries around LLMs.

To address these unique challenges, product security teams should test generative AI APIs pre-deployment for API vulnerabilities and design flaws as well as generative AI specific risks. It’s possible to test resilience against OWASP LLM Top 10 risks like prompt injection and sensitive data disclosure by simulating manipulative LLM inputs and testing how the LLM responds. For LLM APIs that are already in production, you can begin to monitor API traffic to examine LLM inputs and outputs for occurrences of sensitive data, code, or other potentially risky payloads.

Run: Monitor and Protect Generative AI APIs at Runtime

You’ve discovered where generative AI APIs live in your applications and implemented mechanisms for continuous discovery of new generative AI APIs. You’ve evaluated the security posture of those generative AI APIs and initiated a testing program to uncover vulnerabilities and implementation flaws. With visibility into your generative AI attack surface and risks, it’s time to think about runtime protection. This involves implementing security controls that can monitor, detect, and respond to threats on your generative AI-enabled applications in real-time.

Because APIs are the connective tissue between generative AI models and other application services and end users, APIs are the natural implementation point for these security controls. If you can monitor API transactions, you can monitor all inputs to and outputs from LLMs. If you can block API transactions in-line, you can block a malicious prompt injection attack before it reaches the model, or block malicious code in an LLM output from reaching another application service.

By implementing runtime protection for generative AI APIs, you can ensure that your application and end users remain protected from the risks posed by generative AI, including sensitive data leaks and insecure output handling.

Get Started with Traceable’s Generative AI API Discovery

In May, Traceable announced the industry’s first Generative AI API Security solution. Generative AI API Security extends Traceable’s API Security Platform to offer specific protection for Generative AI APIs, including discovery, testing, and runtime protection.

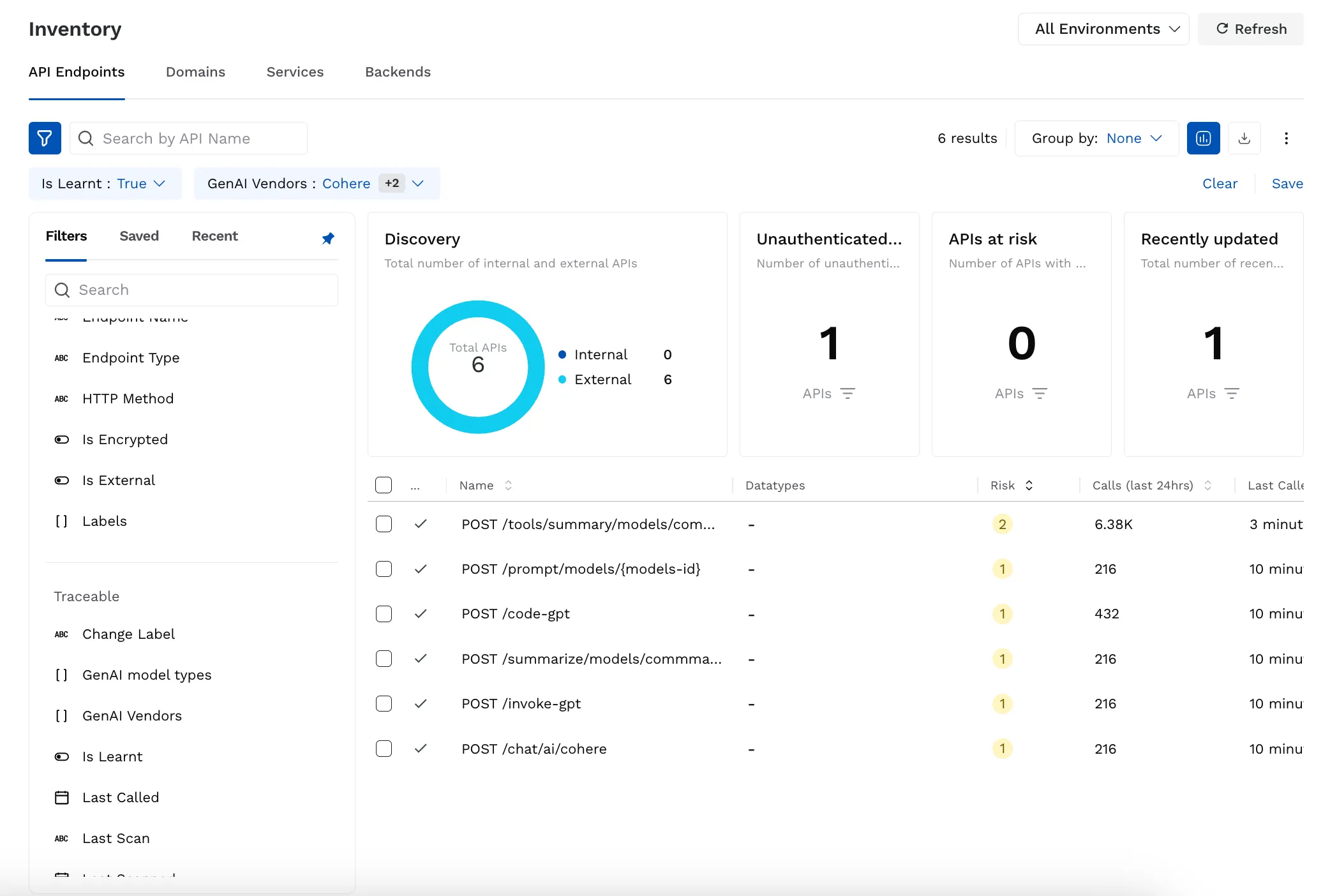

We are excited to share that discovery of generative AI APIs is now included for all Traceable customers as part of Traceable’s API Discovery & Cataloging capability. You can filter by "Gen AI vendors" or "Gen AI model" in your API catalog. We believe that maintaining a comprehensive inventory of all APIs in your organization provides the necessary foundation for managing your application security posture, and that visibility into generative AI APIs is a critical part of this. This capability is now automatically available for every Traceable customer, providing instant visibility into where generative AI APIs are interacting with your applications. Taking the first step into generative AI security is now easy. As you progress on the journey, Traceable can also provide the monitoring, posture management, testing, and runtime protection you need to feel confident that your applications are leveraging generative AI safely and securely.

The Inside Trace

Subscribe for expert insights on application security.

.avif)