Secure Kubernetes Architecture: 6 Factors Essential to Success

Kubernetes has become the go-to container orchestration tool for businesses.

Check out these telling stats [source: The Enterprisers Project]:

- 84 percent of companies are using containers in production.

- Of those, 78 percent are using Kubernetes to manage their containers.

- 69 percent of companies have found security holes due to misconfiguration of their Kubernetes environment.

Your Kubernetes architecture is like a large house with container “tenants” living inside it. You’ll need to build it with care to make sure it’s a safe and happy place to live.

Here are six essential factors to get right when planning (or auditing) your Kubernetes architecture.

Setting the Foundation: Underlying Platform and Control Plane Configuration

The foundation of a house is a critical first step. A weak foundation leads to cracks over time.

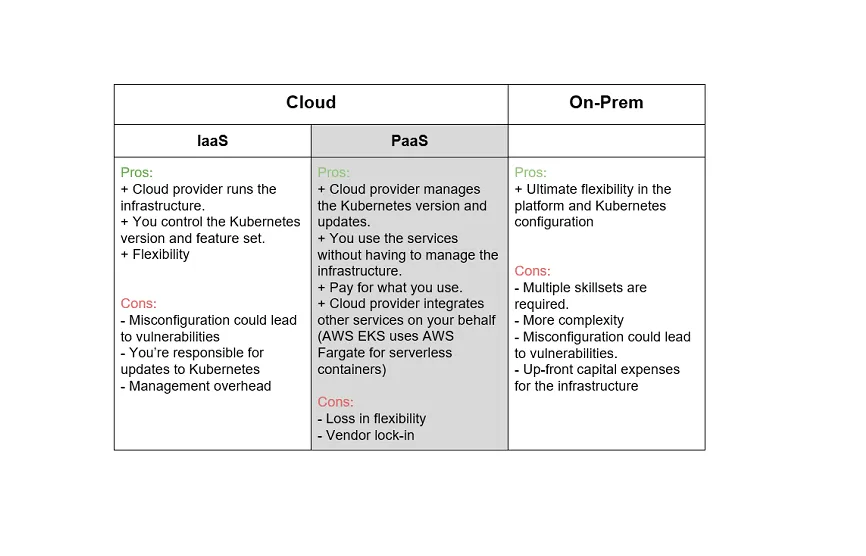

Similarly, where you install Kubernetes is your first choice. There are several options available, each with pros and cons.

Kubernetes is a cloud-native tool, so it makes sense to install it in the cloud. There are two cloud deployment options: Infrastructure as a Service (IaaS) and Platform as a Service (PaaS).

An IaaS installation involves creating a series of virtual machines in the cloud (for instance, using Amazon EC2) and installing Kubernetes on those machines. You’ll be responsible for updating and configuring the Kubernetes installation.

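A PaaS installation, on the other hand, uses a managed Kubernetes service, which all major cloud providers offer (for example, Amazon EKS, Google Kubernetes Engine, and Azure Kubernetes Service). The provider runs the control plane for you, so you can use Kubernetes without managing that infrastructure yourself.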

Even though Kubernetes was made for the cloud, you don’t have to install it in the cloud. An on-prem installation is an option, with many companies choosing to use their virtualization infrastructure to host Kubernetes.

You’ll find a quick summary of the pros and cons of each choice below.

Your decision depends on three factors:

- The skillset of your team

- Your need for control and flexibility

- Your company’s goals

Control Plane Configuration

The Kubernetes control plane consists of the services that run on the control plane node (historically called the master node). The cluster store (etcd), scheduler, controller manager, and API server together make up the control plane.

Plan your control plane security carefully. An attacker who gains access to your control plane can control your entire Kubernetes cluster. Where possible, keep the API server off the public internet and reach the control plane over SSH, through a VPN, or from inside a private VPC.

If you’re using a managed service from a cloud provider, protect your API keys and credentials, and never store them in a source control system. Leaked cloud credentials can lead to a complete takeover of your cloud infrastructure, not only your Kubernetes installation.
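One way to keep credentials out of source control is to check files before each commit. The sketch below is a minimal, hypothetical pre-commit check (the script name `check_keys.py` is an assumption) that flags anything shaped like an AWS access key ID: `AKIA` or `ASIA` followed by 16 uppercase letters or digits. It assumes AWS credentials specifically; dedicated secret scanners cover many more credential formats, so treat this as an illustration rather than a complete safeguard.

```python
import re
import sys
from pathlib import Path

# AWS access key IDs: "AKIA" (long-term) or "ASIA" (temporary) + 16 uppercase letters/digits.
AWS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_aws_key_ids(text: str) -> list:
    """Return every substring of `text` that looks like an AWS access key ID."""
    return AWS_KEY_ID.findall(text)


def scan_files(paths: list) -> int:
    """Report suspected key IDs; return 1 if any were found, else 0."""
    found = 0
    for path in paths:
        text = Path(path).read_text(errors="ignore")
        for match in find_aws_key_ids(text):
            # Print only a prefix so the check itself doesn't leak the full key.
            print(f"{path}: possible AWS access key ID {match[:8]}...")
            found += 1
    return 1 if found else 0


if __name__ == "__main__":
    # Hypothetical hook usage (added, copied, and modified staged files only):
    #   python check_keys.py $(git diff --cached --name-only --diff-filter=ACM)
    sys.exit(scan_files(sys.argv[1:]))
```

Returning a non-zero exit status is what lets a Git hook or CI step block the commit.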

Finding Good Tenants: Container Security and Composition Analysis

The wrong tenants can destroy your house. Similarly, dangerous containers can bring down your Kubernetes environment.

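Make sure any containers you bring in from the outside are vetted and configured correctly. Only use trusted registries, such as Docker Hub or Quay.io, and on public registries favor official or verified-publisher images over arbitrary community uploads.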
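A registry allowlist can be enforced with a simple check on image references, for example in a CI step or an admission hook. The minimal sketch below assumes a hypothetical allowlist and applies Docker's rule for defaulting bare names like `nginx` to Docker Hub. Requiring a pinned `@sha256:` digest is an extra assumption on our part: it ensures the image you vetted is the image you run, since a tag can be re-pointed later.

```python
# Hypothetical allowlist: replace with the registries your organization has vetted.
TRUSTED_REGISTRIES = {"docker.io", "quay.io"}


def registry_of(image: str) -> str:
    """Return the registry host of an image reference (Docker's defaulting rules)."""
    first, sep, _rest = image.partition("/")
    # The first path component names a registry only if it looks like a host.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    # Bare names such as "nginx" or "library/nginx" resolve to Docker Hub.
    return "docker.io"


def is_allowed(image: str) -> bool:
    """Accept images from trusted registries that are pinned to an immutable digest."""
    return registry_of(image) in TRUSTED_REGISTRIES and "@sha256:" in image


if __name__ == "__main__":
    # "registry.example.com" is a hypothetical untrusted registry.
    for ref in ["nginx:1.25",
                "quay.io/org/app@sha256:" + "0" * 64,
                "registry.example.com/app@sha256:" + "0" * 64]:
        print(ref, "allowed" if is_allowed(ref) else "rejected")
```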

Finally, scan your containers before using them. You can use open-source container scanners to find vulnerabilities and malware. You can integrate most scanners into your CI/CD pipeline.
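A common way to wire a scanner into CI/CD is to have it write a JSON report and fail the build when the report contains serious findings. The sketch below assumes a report shaped like the JSON output of Trivy, an open-source scanner: a top-level `Results` list whose entries carry `Vulnerabilities` with `VulnerabilityID` and `Severity` fields. Check your own scanner's schema before relying on it; the HIGH/CRITICAL threshold is also an assumption.

```python
import json
import sys

# Assumption: builds fail on these severities; adjust to your team's policy.
BLOCKING_SEVERITIES = {"HIGH", "CRITICAL"}


def blocking_findings(report: dict) -> list:
    """Return IDs of blocking vulnerabilities from a Trivy-style JSON report."""
    ids = []
    for result in report.get("Results") or []:
        # "Vulnerabilities" may be absent or null for a clean target.
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in BLOCKING_SEVERITIES:
                ids.append(vuln.get("VulnerabilityID", "<unknown>"))
    return ids


if __name__ == "__main__":
    # Usage: python scan_gate.py report.json
    with open(sys.argv[1]) as f:
        findings = blocking_findings(json.load(f))
    for vuln_id in findings:
        print(f"blocking vulnerability: {vuln_id}")
    # A non-zero exit status fails the pipeline step in most CI/CD systems.
    sys.exit(1 if findings else 0)
```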

Plan Your Rooms Wisely: Kubernetes Cluster Partitioning

The rooms in your house each have a different name and purpose. You don’t keep a stove inside your bedroom or a bed in the kitchen. You give tenants private rooms while allowing them to share common areas, such as the kitchen.

In Kubernetes, namespaces let you logically separate applications within the same cluster. If the cluster is your house, each namespace is a separate room within it.

Give each application in your cluster its own namespace. This practice produces three benefits:

- Logically separates applications and environments (Development, Staging, Production)

- Allows for robust Role-Based Access Control (RBAC)

- Allows for network partitioning for added security within the cluster
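Here is a minimal sketch of a one-namespace-per-application-and-environment layout, written in Python that emits Kubernetes manifests as JSON (which `kubectl apply` accepts just like YAML). The application names, environment names, the `{app}-{env}` naming scheme, and the label keys are all hypothetical.

```python
import json
import re

# Namespace names must be DNS-1123 labels: lowercase alphanumerics and '-', at most 63 characters.
DNS1123_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def namespace_manifests(apps, environments):
    """Build one Namespace manifest per (application, environment) pair."""
    manifests = []
    for app in apps:
        for env in environments:
            name = f"{app}-{env}"
            if len(name) > 63 or not DNS1123_LABEL.fullmatch(name):
                raise ValueError(f"invalid namespace name: {name!r}")
            manifests.append({
                "apiVersion": "v1",
                "kind": "Namespace",
                "metadata": {
                    "name": name,
                    # Hypothetical label keys; labels let NetworkPolicy
                    # namespaceSelectors and other tooling target these namespaces.
                    "labels": {"app": app, "environment": env},
                },
            })
    return manifests


if __name__ == "__main__":
    # Example: python ns.py | kubectl apply -f -
    items = namespace_manifests(["billing", "search"], ["dev", "staging", "prod"])
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```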

Kubernetes RBAC Primer

You can check out the Kubernetes docs for comprehensive explanations of RBAC within Kubernetes. But here’s a quick primer for those who don’t have the time to read it now.

Kubernetes has Roles and ClusterRoles. They contain rules that represent a set of permissions. Permissions are purely additive: there are no “deny” rules, so anything you don’t explicitly grant is denied, and you have to know exactly what access is necessary.
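To make the additive model concrete, here is a deliberately simplified Python model of how rules combine. It is an illustration, not the API server's actual authorizer: access is granted if any rule in any bound role matches, and denied otherwise. Real rules also support `resourceNames`, subresources, and `nonResourceURLs`, which this sketch ignores; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    """Simplified RBAC rule (ignores resourceNames, subresources, and nonResourceURLs)."""
    api_groups: frozenset
    resources: frozenset
    verbs: frozenset


def _covers(values: frozenset, wanted: str) -> bool:
    # "*" is the RBAC wildcard for "all".
    return "*" in values or wanted in values


def rbac_allows(rules, api_group: str, resource: str, verb: str) -> bool:
    """Rules from every bound Role/ClusterRole are unioned: ANY match grants access.

    There is no deny rule, so an empty or non-matching rule set means "denied".
    """
    return any(
        _covers(r.api_groups, api_group)
        and _covers(r.resources, resource)
        and _covers(r.verbs, verb)
        for r in rules
    )


if __name__ == "__main__":
    # The core API group (where pods live) is the empty string "".
    pod_reader = PolicyRule(frozenset({""}), frozenset({"pods"}),
                            frozenset({"get", "watch", "list"}))
    print(rbac_allows([pod_reader], "", "pods", "list"))    # granted by pod_reader
    print(rbac_allows([pod_reader], "", "pods", "delete"))  # nothing grants it
```

Because adding a rule can only ever widen access, the safe default is to start with no roles and grant the minimum each subject needs.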

Each Role is scoped to a specific namespace. ClusterRoles, on the other hand, are not namespaced. In Kubernetes, an object is either namespaced or not; it can’t be both. That’s why there are two resources, one for each scenario.

Use Roles to create permissions tied to a specific application. Use ClusterRoles for access to cluster-scoped resources (such as nodes), or to define a common set of permissions that you grant in several namespaces.

RoleBindings and ClusterRoleBindings assign the permissions defined in a role to specific users. A RoleBinding grants permissions within a single namespace, while a ClusterRoleBinding grants access cluster-wide.

For example, assigning the “pod-reader” Role to the user “jane” in the “default” namespace allows “jane” to read pods in the “default” namespace.
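The “pod-reader”/“jane” example can be written as two manifests: a Role and a RoleBinding. The sketch below mirrors the example in the Kubernetes RBAC documentation, generated as JSON from Python; the binding name `pod-reader-jane` and the helper function names are hypothetical.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str) -> dict:
    """A Role that grants read-only access to pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": "pod-reader"},
        # "" is the core API group, where pods live.
        "rules": [{"apiGroups": [""], "resources": ["pods"],
                   "verbs": ["get", "watch", "list"]}],
    }


def bind_role_to_user(role_name: str, user: str, namespace: str) -> dict:
    """A RoleBinding that grants `role_name` to `user`, only within `namespace`."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_API}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_API},
    }


if __name__ == "__main__":
    items = [pod_reader_role("default"), bind_role_to_user("pod-reader", "jane", "default")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

After applying the output with `kubectl apply -f -`, an administrator can confirm the result with `kubectl auth can-i list pods --namespace default --as jane`.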

Roles define a set of permissions. Then users, groups, or service accounts (collectively called “subjects”) are assigned roles to grant access to specific resources within the cluster.

Define network partitioning and access control appropriate for the business’s needs before deploying any application into your cluster.

Watch Your Wiring: Inter-Pod Communications

Shoddy wiring is more than an inconvenience. It can lead to damaging fires or even destroy the entire house.

Similarly, poor inter-pod communications can bring your Kubernetes cluster grinding to a halt.

A Kubernetes environment is a small network ecosystem. Pod-to-pod communication is governed by the same kinds of network topologies, protocols, and policies you’d find in any corporate network.

A full discussion of Kubernetes networking is beyond the scope of this article. You can read up on it using the Kubernetes networking documentation. But here are some quick pointers.

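Service Topology lets you define how traffic flows to and from a particular Service based on the cluster’s node topology. For example, a Service can specify that traffic should be routed to endpoints on the same node as the client.

Service Topology is useful in the public cloud, where you can use it to keep a Service’s traffic within a particular availability zone or region to reduce latency. Note that Service Topology was an alpha feature and was removed in Kubernetes 1.22; on current releases, topology-aware routing serves a similar purpose.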

Another useful networking tool is NetworkPolicies. These application-centric constructs specify how a pod is allowed to communicate with other network entities. They are especially useful when you want to control traffic at the IP address or port level (OSI layers 3 and 4).
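A common pattern is to deny all ingress traffic to a namespace by default and then allow only the flows you need. The sketch below builds two NetworkPolicy manifests as JSON: a default-deny policy, and a rule letting `frontend` pods reach `backend` pods on TCP port 8080. The namespace, `app` labels, and port are hypothetical. Keep in mind that NetworkPolicies are enforced by the cluster’s network plugin; if the plugin doesn’t support them, creating the policies has no effect.

```python
import json

NETPOL_API = "networking.k8s.io/v1"


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no ingress traffic."""
    return {
        "apiVersion": NETPOL_API,
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all pods; with no "ingress" rules, nothing is allowed in.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_from(namespace: str, source_app: str, target_app: str, port: int) -> dict:
    """Allow pods labeled app=<source_app> to reach app=<target_app> on one TCP port."""
    return {
        "apiVersion": NETPOL_API,
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    items = [default_deny_ingress("shop"), allow_from("shop", "frontend", "backend", 8080)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```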

Finally, you need a robust authentication and authorization strategy. We recommend using a service mesh to simplify load balancing, service discovery, encryption, and authentication. For example, some service meshes, such as Istio, can validate JSON Web Tokens (JWTs) issued by OAuth 2.0 or OpenID Connect providers, which makes service authorization much easier to manage.

Secure the Front Door: Ingress Architecture

There’s no point in building a secure home and then leaving the front door unlocked. You don’t want to do that with your Kubernetes cluster either.

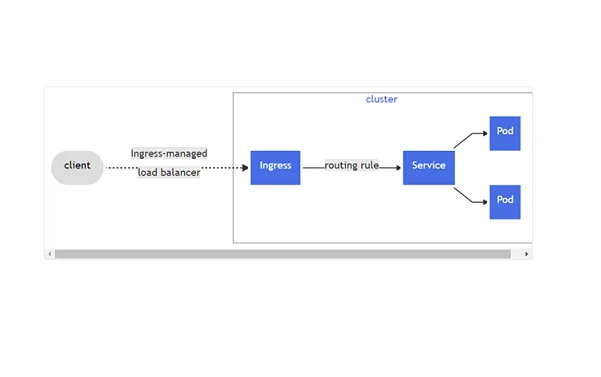

In Kubernetes, Ingress exposes HTTP and HTTPS routes from outside the cluster to your services within it. Ingress is your environment’s “front door.”

[source: Kubernetes docs]

Carefully consider which Ingress controller to use in your Ingress architecture. NGINX is a tried-and-true web server with a mature set of security features for protecting your services, and it now offers an Ingress controller for cloud-native Kubernetes environments.

However, you may want to give Envoy a look. It’s an open-source edge and service proxy originally built at Lyft specifically for cloud-native applications. It tackles the observability and network-debugging issues common in microservice architectures. Envoy also serves as the data plane proxy in service meshes such as Istio.

Because it is the “front door” to your cluster, your choice of Ingress controller also affects security. You can use it to terminate TLS connections from clients before they reach your services. Be sure to include a key management strategy for your TLS certificates and private keys, such as a Key Management Service or another secure vault.
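As an illustration, the sketch below builds an Ingress manifest (as JSON) that terminates TLS for a single host. The host name, namespace, Secret name, Service, and the `nginx` ingress class are hypothetical. The certificate and private key live in a Kubernetes Secret of type `kubernetes.io/tls`; how that Secret gets populated from your Key Management Service or vault is the part your key management strategy has to cover.

```python
import json


def tls_ingress(namespace: str, host: str, tls_secret: str,
                service: str, service_port: int, ingress_class: str) -> dict:
    """Ingress that terminates TLS for `host` and forwards decrypted traffic to a Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{service}-ingress", "namespace": namespace},
        "spec": {
            "ingressClassName": ingress_class,
            # The referenced Secret (type kubernetes.io/tls) holds tls.crt and tls.key.
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": service_port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(tls_ingress("shop", "shop.example.com", "shop-example-com-tls",
                                 "web", 80, "nginx"), indent=2))
```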

Remember to follow the same security practices for your Kubernetes cluster as you do for any other part of your network infrastructure. Watch for OWASP Top 10 and OWASP API Security Top 10 vulnerabilities, as well as logic-based threats that exploit your application’s business logic.

(Hint: Traceable watches for common and logic-based vulnerabilities by learning the business logic of your microservice applications. Check it out for yourself here).

Finally, make sure your ingress plays nicely with mobile and IoT devices if you serve those clients.

Install Security Cameras: Secure and Monitor Microservice Communications

Video doorbells and security cameras are becoming commonplace. Homeowners want to know what is happening in and around their house.

Securing and monitoring traffic within your Kubernetes cluster is equally critical. Make sure that microservice communications are protected and that you know what requests are being sent between services.

Consider encrypting every service-to-service connection, for example with mutual TLS (mTLS). Also ensure real-time API monitoring for both REST and gRPC traffic.
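In a service mesh, sidecar proxies usually handle this transparently. To show what mutual TLS means at the protocol level, here is a minimal Python sketch using the standard `ssl` module: a server-side context that requires and verifies a client certificate, so both sides prove their identity before any data flows. The file paths are placeholders for certificates issued by a hypothetical internal CA.

```python
import ssl
from typing import Optional


def mtls_server_context(ca_file: Optional[str] = None,
                        cert_file: Optional[str] = None,
                        key_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that also requires and verifies a client certificate."""
    # Purpose.CLIENT_AUTH builds a context for servers that authenticate clients.
    # ca_file should be the internal CA that issues your services' certificates;
    # if it is None, the system's default CA store is used, which is rarely what
    # you want for service-to-service traffic.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED is what makes the TLS "mutual": clients without a valid cert are rejected.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        # The server's own certificate and private key.
        ctx.load_cert_chain(cert_file, key_file)
    return ctx
```

A server would then wrap its listening socket with `ctx.wrap_socket(sock, server_side=True)`; the calling service needs a matching client-side context that presents its own certificate.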

Zero Trust principles are becoming more popular as new technologies that support them emerge. Zero Trust is an operating model in which no traffic is trusted by default, whether it originates inside or outside your network.

Within Kubernetes, implementing this model means verifying every request, client, or object trying to connect to your services. Nothing happens within your cluster unless the user or service account has proven that they have permission to act.
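At the level of a single service, Zero Trust boils down to a deny-by-default check on every request. The Python sketch below is a hypothetical illustration, not any particular product’s API: it assumes the caller’s identity has already been verified (for example, from an mTLS client certificate, represented here as a SPIFFE-style ID) and grants access only when an explicit policy entry matches. Network location plays no part in the decision.

```python
from typing import Optional

# Hypothetical policy: caller identity -> allowed (HTTP method, path prefix) pairs.
# The SPIFFE-style IDs assume identities taken from verified mTLS client certificates.
ALLOW_POLICY = {
    "spiffe://cluster.local/ns/shop/sa/frontend": {
        ("GET", "/api/products"),
        ("POST", "/api/cart"),
    },
}


def _under(path: str, prefix: str) -> bool:
    # Match whole path segments so "/api/productsX" does not match "/api/products".
    return path == prefix or path.startswith(prefix + "/")


def authorize(peer_identity: Optional[str], method: str, path: str) -> bool:
    """Deny by default: every request needs a verified identity AND an explicit grant."""
    if peer_identity is None:
        # No verified identity: reject, even if the request came from inside the cluster.
        return False
    grants = ALLOW_POLICY.get(peer_identity, set())
    return any(method == m and _under(path, prefix) for m, prefix in grants)
```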

Zero Trust is essential to cloud-native security. At any moment, dozens or hundreds of services are trying to perform actions and share information. Zero Trust ensures that every request is validated and there are no holes due to the assumption that internal traffic is automatically safe.

Protect Your House

The world of microservices and cloud-native architecture unlocks countless opportunities for businesses today. But new technologies also come with new dangers: new opportunities for malicious actors to steal sensitive information.

We’ve discussed six essential factors for securing your Kubernetes architecture:

1. Choosing the underlying platform and control plane configuration

2. Vetting and securing the containers running in your environment

3. Implementing cluster partitioning and access control

4. Defining inter-pod communications

5. Choosing your Ingress architecture (protecting your “front door”)

6. Securing and monitoring your microservice communications

With these factors in mind, what should your next steps be?

If you have an existing Kubernetes installation, use these factors as a mini-audit. Review your current policies and see how well they match up in these six areas.

If you’re planning your first Kubernetes installation, use these factors as a guide to making sure you cover all of your bases. Meet with implementers and make sure you have a plan for all of these factors.

Then your Kubernetes environment will be a happy home for your microservices.
